Abstract

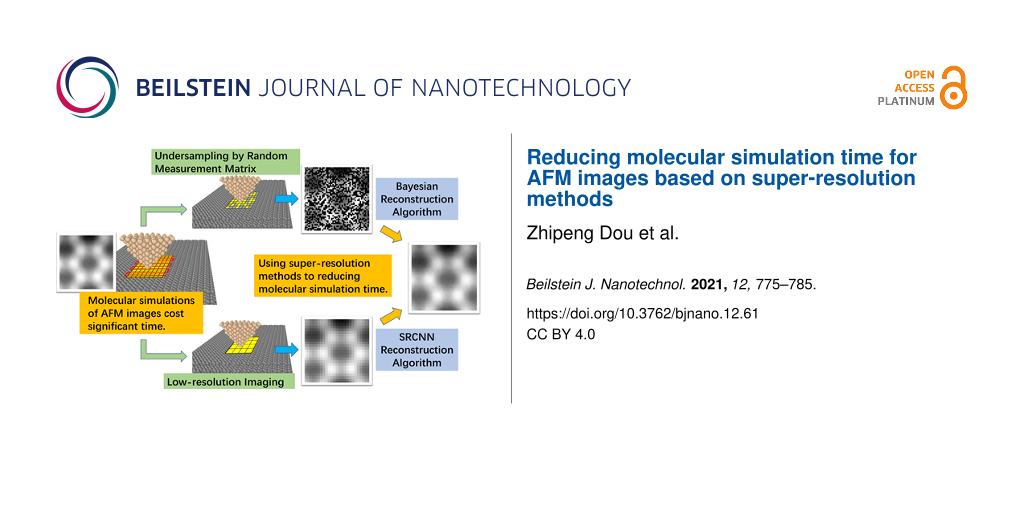

Atomic force microscopy (AFM) is an important tool for nanoscale imaging and characterization with atomic and subatomic resolution. Theoretical investigations are becoming increasingly important for the interpretation of AFM images. Researchers have used molecular simulation to examine the AFM imaging mechanism. With the recent surge of research applying machine learning to AFM, AFM images obtained from molecular simulation have also been used as training data. However, the simulation is extremely time consuming. In this paper, we apply super-resolution methods, including compressed sensing and deep learning methods, to reconstruct simulated images and to reduce simulation time. Several molecular simulation energy maps under different conditions are presented to demonstrate the performance of the reconstruction algorithms. Through the analysis of the reconstructed results, we find that both presented algorithms can complete the reconstruction with good quality and greatly reduce simulation time. Moreover, the super-resolution methods can be used to speed up the generation of training data and to vary the simulation resolution for AFM machine learning.

Introduction

Atomic force microscopy methods are key tools for nanoscale imaging and characterization with unparalleled resolution [1]. The first atomic-resolution AFM image, of the (001) surface of NaCl, was reported in ultrahigh vacuum [2]. Later, in noncontact mode, the reconstructed silicon (111)-(7×7) surface was imaged with 6 Å lateral and 0.1 Å vertical resolution [3]. Subsequently, functionalizing the tip with closed-shell molecules and using a qPlus force sensor enabled imaging of the internal structure of molecules [4-7], resolving features of weakly bonded molecules [8-12], and measuring bond-order relations [13-15]. The interpretation of AFM images, however, is often complicated, and theoretical investigations are becoming increasingly important for their analysis. Molecular simulation is a useful tool to study atomic-scale interactions. It has been used to examine the AFM imaging mechanism, to study the factors affecting resolution [16-22], and to establish an appropriate simulation methodology for explaining the complex imaging mechanism in liquids [23-25]. In addition, there has been a recent surge of research applying machine learning to AFM, including predicting molecular structure [26], recognizing and classifying surface features [27-29], and fast scanning [30,31]. The main problem in training machine learning models is providing sufficient labeled training data. High-resolution AFM experiments are time consuming and experimental data are, a priori, unlabeled, which renders direct training on experimental data impractical. Researchers have therefore used simulated AFM images, for which the correct labels are known a priori, as training data, and the trained models generalize correctly to real AFM scans [26,32,33]. Molecular simulation methods are thus effective for AFM image analysis and training data generation; however, it takes a long time to obtain a complete image of a sample surface. Molecular simulation imaging generally simulates the interaction of the tip atoms with the sample in a point-by-point scan. An image contains hundreds to thousands of pixels and each pixel needs to be simulated once. The most direct way to reduce the simulation time is to decrease the number of calculated points and to reconstruct the whole image from the undersampled points.

To address this problem, we can use super-resolution methods [34,35], a classical concept in computer vision. Super-resolution methods reconstruct a high-resolution image from a low-resolution image. There are a variety of methods in the field of super-resolution. Compressed sensing (CS) and deep learning are two typical approaches with excellent imaging performance [36]; they use the principle of signal sparsity and the learning ability of deep neural networks, respectively, to achieve super-resolution tasks. CS makes it possible to recover data almost perfectly from undersampled information [37] and is widely used in AFM imaging to reduce sampling time [38-43]. A CS algorithm consists of three main parts: the construction of the measurement matrix, the sparse representation of the signal, and the image reconstruction. In some sense, taking a random measurement matrix is an optimal strategy for the acquisition of sparse signals [44]. The choice of the reconstruction algorithm is essential for CS. Bayesian compressed sensing (BCS) can achieve a better recovery performance due to its high degree of sparsity and its ability to estimate the posterior distribution of the reconstructed signal [45-48], even if the signal is not sparse. In addition to methods that exploit signal sparsity, learning-based super-resolution reconstruction methods are an active research topic. The super-resolution convolutional neural network (SRCNN) was the first neural network model for super-resolution reconstruction tasks [35]. The SRCNN consists of a three-layer convolutional neural network that directly learns an end-to-end mapping between low- and high-resolution images, making the reconstructed image as close to the original image as possible. Generally, increasing the network depth can improve the reconstruction accuracy. With the development of deep learning in super-resolution methods, many other models have been proposed, such as VGG [49], ResNet [50], GAN [51], and VDSR [52]. The application of deep learning in super-resolution methods has been increasing in recent years, and it is also used in AFM to speed up image acquisition [31,53]. The abovementioned reconstruction algorithms are mostly aimed at experimentally scanned images, but they could also be applied to molecular simulation to speed up simulations.

In this paper, BCS and SRCNN methods are used to reconstruct molecular simulation images in order to reduce the simulation time. The energy maps of the interaction between several tips and samples under different conditions are simulated in dynamic and quasi-static modes. The molecular dynamics simulation details and the main steps of the reconstruction algorithms are presented. Then, several reconstruction results are presented to compare the performance of the two algorithms. We find that both algorithms can complete the reconstruction with good quality and reduce the simulation time. In addition, the two algorithms can be used to speed up the generation of training data for AFM machine learning.

Methods

Molecular dynamics simulation

To test the effectiveness of the reconstruction algorithms, we perform molecular dynamics simulations of AFM imaging under different conditions. The dynamic process (AM mode) and the quasi-static process (in which the relative tip–sample position remains unchanged during the simulation) are carried out separately. The simulation protocol for computing tip–sample interactions is similar to that of our previous work [54]. In the simulations, the tip consists of silicon atoms and takes different shapes: a cone, a hemisphere, and a single silicon atom. The conical tip has a height of 13 Å and an opening angle of 70°, and the hemispherical tip has a radius of 16 Å. We calculate the surface energy maps of a four-layer graphite sample and a gold sample. The dimensions of the graphite and gold substrates are 9 × 9 × 1.1 nm³ and 9 × 9 × 0.4 nm³, respectively. All simulations are performed under constant-height conditions. The schematic of the AM mode simulation model with a conical tip apex is illustrated in Figure 1. The bottom-layer atoms of the substrate are fixed to keep the sample stable. For the graphite substrate, the carbon–carbon interactions within each graphene layer are described by the AIREBO potential [55]. The Lennard–Jones (LJ) potential is used to describe the interaction between the graphene layers and the interaction between tip and substrate. The LJ parameters are $\varepsilon_{\mathrm{C–C}}$ = 2.84 meV and $\sigma_{\mathrm{C–C}}$ = 0.34 nm for C–C, and $\varepsilon_{\mathrm{Si–C}}$ = 8.909 meV and $\sigma_{\mathrm{Si–C}}$ = 0.3326 nm for Si–C (ε is the depth of the potential well and σ is the finite distance at which the pair potential is zero). The cut-off distance for the C–C interaction is 1.19 nm. The cut-off distance for the Si–C interaction is varied to observe its impact on the simulation. Increasing the cut-off distance increases the number of atoms involved in the tip–sample interaction, and hence the computational cost, but yields a more accurate result. For the gold substrate, the interaction between Au atoms is calculated with the embedded atom method (EAM) potential [56]. The LJ potential is employed to calculate the interaction between Si and Au ($\varepsilon_{\mathrm{Si–Au}}$ = 5.4297 meV, $\sigma_{\mathrm{Si–Au}}$ = 0.33801 nm). Because the substrate is large relative to the tip and the cut-off distance is small, the effect of the boundary can be ignored. The NVT ensemble (the number of atoms, the volume, and the temperature are conserved) is applied to the free atoms in the system. All simulations are performed using the LAMMPS simulation software with a time step of 1 fs.
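To make the role of ε and σ concrete, the following minimal sketch evaluates the standard 12-6 Lennard–Jones pair energy with the parameters quoted above. The function name `lj_energy`, the dictionary `LJ_PARAMS`, and the plain truncation at the cut-off (no energy shift) are illustrative assumptions, not details taken from the simulation setup.

```python
# Parameters quoted in the text: (epsilon in meV, sigma in nm).
LJ_PARAMS = {
    "C-C": (2.84, 0.34),
    "Si-C": (8.909, 0.3326),
    "Si-Au": (5.4297, 0.33801),
}

def lj_energy(r, epsilon, sigma, cutoff=None):
    """12-6 Lennard-Jones pair energy in meV for a separation r in nm.

    Pairs beyond the optional cut-off contribute zero energy
    (plain truncation is an assumption of this sketch).
    """
    if cutoff is not None and r > cutoff:
        return 0.0
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)

eps, sig = LJ_PARAMS["Si-C"]
r_min = 2.0 ** (1.0 / 6.0) * sig  # separation at which the energy is minimal

print(f"Si-C energy at r = sigma: {lj_energy(sig, eps, sig):.3f} meV")
print(f"Si-C energy at r_min = {r_min:.4f} nm: {lj_energy(r_min, eps, sig):.3f} meV")
```

The energy vanishes at r = σ and reaches its minimum of −ε at r = 2^(1/6) σ, which is why ε is described as the well depth.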

![[2190-4286-12-61-1]](/bjnano/content/figures/2190-4286-12-61-1.png?scale=2.0&max-width=1024&background=FFFFFF)

Figure 1: Snapshot of the AM mode molecular dynamics simulation. $Z_c$ is the distance between the virtual atom at rest and the sample surface. The dynamic response of the tip is determined by the excitation of the virtual atom and the tip–sample interactions. In the simulation, the tip moves parallel to the sample surface along a raster scan path.


In AM mode, a virtual atom is added above the tip apex and connected to it by a spring in the z-direction. The virtual atom is excited by a sinusoidal signal, mimicking the acoustic excitation of AM-AFM. The excitation frequency is adjusted via the spring stiffness. A damping force is applied to the tip to ensure the correct damping of the tip oscillation. In the simulation, the initial distance $Z_c$ between the virtual atom and the sample surface is kept constant at a suitable value. We then employ the raster scanning method to construct the map of the interaction energy averaged over a calculation period. In the scan, the tip moves parallel to the sample surface in steps of 0.1 Å. After scanning 51 points, the tip moves to the next line until all lines are scanned. In this way, the average energy map of the tip–sample interaction is obtained. In a quasi-static simulation, the tip is not excited and the conical tip is replaced by a hemispherical tip or a single-atom tip. The tip is scanned parallel to the surface at a fixed distance from the sample. The raster scanning method is also applied in the quasi-static mode, with an image size of 101 × 101 points.
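The raster scan described above can be sketched as a simple generator of lateral tip offsets. This is only an illustration: the function name `raster_positions`, the origin at (0, 0), and the assumption that every line is scanned in the same direction are not specified in the text.

```python
def raster_positions(n_points=51, step=0.1):
    """Return the (x, y) tip offsets in angstrom, ordered line by line."""
    positions = []
    for row in range(n_points):        # slow-scan axis: one row per scan line
        for col in range(n_points):    # fast-scan axis: points along one line
            positions.append((col * step, row * step))
    return positions

scan = raster_positions()
print(f"{len(scan)} tip positions, from {scan[0]} to {scan[-1]}")
```

Each returned position corresponds to one independent energy calculation, so the length of the list is the number of simulations needed for a full image.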

Bayesian compressed sensing

For linear systems, the signal acquisition process can be modeled by a linear equation. In the molecular simulation of an AFM image, every pixel of the image is calculated independently. An n × n simulated image can be regarded as a 2D matrix whose rows are concatenated to form a vector $x \in \mathbb{R}^N$ ($N = n \times n$). The entire signal acquisition process can be described as

$$y = \Phi x \qquad (1)$$

where x is the true value of the original signal and $y \in \mathbb{R}^M$ is the measured value of the original signal. The matrix $\Phi \in \mathbb{R}^{M \times N}$ represents the linear measurement matrix that maps the N-dimensional signal to the M-dimensional measurement result, as shown in Figure 2. If M < N, the linear observation system is underdetermined, and we can use the sparsity of the signal to complete the reconstruction.

![[2190-4286-12-61-2]](/bjnano/content/figures/2190-4286-12-61-2.png?scale=2.0&max-width=1024&background=FFFFFF)

Figure 2: Mathematical model of compressed-sensing AFM imaging. The image obtained by AFM can be regarded as a two-dimensional matrix, and each pixel of the image is an element of the matrix. The imaging process can be regarded as collecting elements of the vector x, where Φ is the measurement matrix and y is the measurement result.


Signal sparsity means that the signal contains only a few non-zero elements. Simulated AFM images are usually not sparse in the pixel domain but become sparse after the discrete cosine transform (DCT). Denoting the DCT sparse basis by Ψ, so that $x = \Psi s$, Equation 1 is rewritten as

$$y = \Phi x = \Phi \Psi s = \theta s \qquad (2)$$

where $y \in \mathbb{R}^M$ is a compressed sampling of the original signal $x \in \mathbb{R}^N$, and its dimension is smaller than that of the original signal (M < N). The original signal x is represented by the K-sparse coefficient vector s in the transform domain of the sparse basis Ψ. The product of the measurement matrix and the sparse transform matrix is denoted by θ = ΦΨ. Such a CS framework can solve linear equations that have unique sparse solutions.
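The relation between x, s, Φ, Ψ, and θ = ΦΨ can be checked numerically with a small sketch. For brevity it uses a 1D toy signal instead of a flattened image, an orthonormal DCT-II basis, and a measurement matrix that simply keeps M randomly chosen entries of x. These choices, the toy sizes, and all helper names are assumptions made for illustration.

```python
import math
import random

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that s = C x and x = C^T s."""
    rows = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        rows.append([scale * math.cos(math.pi * (2 * j + 1) * k / (2 * n))
                     for j in range(n)])
    return rows

def matvec(matrix, vector):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]

N, M = 16, 8                          # signal length, number of measurements
C = dct_matrix(N)
Psi = [list(col) for col in zip(*C)]  # sparse basis (transpose of C): x = Psi s

# Toy signal: a single cosine, which has exactly one non-zero DCT coefficient.
x = [math.cos(math.pi * (2 * j + 1) * 2 / (2 * N)) for j in range(N)]
s = matvec(C, x)
K = sum(abs(c) > 1e-9 for c in s)     # sparsity of s

# Phi keeps M randomly chosen entries of x (a single 1 in each row).
rng = random.Random(0)
kept = sorted(rng.sample(range(N), M))
Phi = [[1.0 if j == i else 0.0 for j in range(N)] for i in kept]

y = matvec(Phi, x)                    # y = Phi x
Theta = [Psi[i] for i in kept]        # Theta = Phi Psi, i.e. the kept rows of Psi
y_from_s = matvec(Theta, s)           # y = Theta s

print(f"K = {K} non-zero coefficient(s); "
      f"max |Phi x - Theta s| = {max(abs(a - b) for a, b in zip(y, y_from_s)):.2e}")
```

The sketch only verifies the forward model. Recovering s from y when M < N is the job of the reconstruction algorithm and is not attempted here.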

The design of the measurement matrix and the choice of the reconstruction algorithm are important for CS. We use a random measurement matrix, as in an idealized CS application, and a fast reconstruction algorithm based on BCS to reconstruct the images. Since the measurement is random, the conditional probability density function of the measurement can be described by a zero-mean Gaussian distribution with variance $\sigma^2$. The CS reconstruction problem is thereby converted into a linear regression problem, and the parameter s can be obtained by the maximum likelihood method. The details of the BCS reconstruction algorithm in AFM are given in our previous work [47].

The whole procedure is shown in Figure 3. First, a random measurement matrix is generated according to the size of the final image and the number of compressed sampling points. For molecular simulation, the measurement matrix needs to be transformed into the required sampling positions. Then, the interaction energy between tip and sample is calculated at each sampling position, which yields the undersampled image of the surface. Finally, the BCS reconstruction algorithm is used to reconstruct the whole image.
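The first steps of this procedure, up to the undersampled image, can be sketched as follows. The function names, the fixed random seed, and the analytic `toy_energy` function that stands in for a molecular dynamics calculation are hypothetical; the BCS reconstruction step itself is not shown.

```python
import math
import random

def sampling_positions(n, rate, seed=0):
    """Pick int(rate * n * n) distinct pixels of an n x n image at random."""
    n_total = n * n
    m = int(rate * n_total)
    rng = random.Random(seed)
    flat_indices = sorted(rng.sample(range(n_total), m))
    # Convert flat (row-major) indices into (row, col) pixel positions.
    return [divmod(index, n) for index in flat_indices]

def undersampled_image(positions, n, simulate_pixel):
    """Evaluate simulate_pixel only at the sampled positions; others stay None."""
    image = [[None] * n for _ in range(n)]
    for row, col in positions:
        image[row][col] = simulate_pixel(row, col)
    return image

def toy_energy(row, col):
    """Placeholder for one tip-sample energy calculation (not a real MD run)."""
    return math.sin(0.3 * row) * math.cos(0.3 * col)

positions = sampling_positions(n=51, rate=0.5)
image = undersampled_image(positions, 51, toy_energy)
n_simulated = sum(value is not None for line in image for value in line)
print(f"simulated {n_simulated} of {51 * 51} pixels")
```

Only the sampled pixels trigger a simulation, so the cost of generating the undersampled image scales with the undersampling rate.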

![[2190-4286-12-61-3]](/bjnano/content/figures/2190-4286-12-61-3.png?scale=2.0&max-width=1024&background=FFFFFF)

Figure 3: Block diagram of the fast molecular simulation method for AFM imaging based on Bayesian compressed sensing. First, the random measurement matrix is obtained; then, the pixel values are simulated at the positions given by the random matrix; finally, the BCS method is used to reconstruct the complete image.


Super-resolution convolutional neural network

SRCNN is a representative deep learning method for super-resolution and consists of three layers. The method uses bicubic interpolation as a preprocessing step and then, through convolution, extracts overlapping image patches and represents each patch as a high-dimensional vector; these vectors comprise a set of feature maps. After that, each high-dimensional vector is nonlinearly mapped onto another high-dimensional vector, and the mapped vectors comprise another set of feature maps. Finally, the patch-wise representations are aggregated to obtain the reconstructed high-resolution image. An SRCNN is constructed to obtain a super-resolution image from a low-resolution simulated image [35]. Filters with spatial sizes of 9 × 9, 5 × 5, and 5 × 5 are used.

The flow chart of the proposed SRCNN reconstruction method is shown in Figure 4. First, we use molecular dynamics simulation to obtain low-resolution energy maps of the tip–sample interaction. In contrast to the full image simulation, the scanning step size of a low-resolution image is increased by a factor of two or three, which reduces the number of simulated points to 1/4 or 1/9, respectively. Then, the low-resolution image is upscaled to the desired size through bicubic interpolation; the result is denoted as X. The first layer extracts the image features of X as an operation $F_1$:

$$F_1(X) = \max(0, W_1 * X + B_1) \qquad (3)$$

where $W_1$ represents 64 convolution kernels of size 9 × 9, $B_1$ represents the biases, and * denotes the convolution operation. The first layer extracts a 64-dimensional feature for each patch. The non-linear mapping then acts on a 5 × 5 “patch” of the feature map as follows:

$$F_2(X) = \max(0, W_2 * F_1(X) + B_2) \qquad (4)$$

where $W_2$ and $B_2$ represent 32 convolution kernels of size 5 × 5 and the biases, respectively. The final super-resolution image is generated by the reconstruction layer as follows:

$$F(X) = W_3 * F_2(X) + B_3 \qquad (5)$$

where $W_3$ and $B_3$ represent a linear filter of size 5 × 5 and the bias, respectively. The network parameters $\Theta = \{W_1, B_1, W_2, B_2, W_3, B_3\}$ are trained to construct the end-to-end mapping between low- and high-resolution images. Given a set of high-resolution images $\{Y_i\}$ and their corresponding low-resolution images $\{X_i\}$, the mean squared error is used as the loss function:

$$L(\Theta) = \frac{1}{n} \sum_{i=1}^{n} \left\lVert F(X_i; \Theta) - Y_i \right\rVert^2 \qquad (6)$$

where n is the number of training samples. The parameters are updated by gradient descent as

$$\Theta \leftarrow \Theta - \eta \frac{\partial L}{\partial \Theta} \qquad (7)$$

where η denotes the learning rate.
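As a quick size check of the three-layer network described above, the following sketch counts the trainable parameters implied by the stated kernel sizes (9 × 9, 5 × 5, 5 × 5) and filter numbers (64, 32, and one output filter). A single-channel input, that is, one energy value per pixel, is an assumption of this sketch, as are the names used.

```python
# (layer name, kernel size, input channels, output channels)
LAYERS = [
    ("feature extraction (W1, B1)", 9, 1, 64),
    ("non-linear mapping (W2, B2)", 5, 64, 32),
    ("reconstruction (W3, B3)", 5, 32, 1),
]

def count_parameters(kernel, channels_in, channels_out):
    """Return (number of weights, number of biases) of one convolutional layer."""
    weights = kernel * kernel * channels_in * channels_out
    biases = channels_out
    return weights, biases

total_weights = 0
total_biases = 0
for name, kernel, c_in, c_out in LAYERS:
    weights, biases = count_parameters(kernel, c_in, c_out)
    total_weights += weights
    total_biases += biases
    print(f"{name}: {weights} weights, {biases} biases")

print(f"total: {total_weights} weights + {total_biases} biases")
```

Most of the parameters sit in the middle layer, because it connects 64 input feature maps to 32 output feature maps.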

![[2190-4286-12-61-4]](/bjnano/content/figures/2190-4286-12-61-4.png?scale=2.0&max-width=1024&background=FFFFFF)

Figure 4: The flow chart of the proposed SRCNN reconstruction method. First, the low-resolution simulated image is obtained, then the SRCNN method is used to reconstruct the high-resolution image. The SRCNN consists of three layers to implement feature extraction, non-linear mapping, and reconstruction, respectively.


Results and Discussion

Molecular dynamics simulation results

Molecular dynamics simulation is used to obtain the energy maps of graphite and gold samples under different conditions for reconstruction, as shown in Figure 5. The first two rows show the graphite sample and the last row shows the gold sample. Figure 5a shows the interaction between the conical tip and the graphite sample in AM mode, as described in Figure 1. In this situation, the tip atoms interact intermittently with the sample during the oscillation periods. The size effect of the tip apex is not obvious and the energy map is mainly affected by the height of the sample atoms. In AM mode, 5000 time steps are needed to bring the tip into a stable oscillation before the average interaction energy is calculated. The inter-layer and intra-layer C–C interactions in the graphite sample are different and need to be calculated separately. In this simulation, one pixel takes about six minutes; therefore, a 51 × 51 image takes approximately ten days to simulate. The other images of Figure 5 are simulated under quasi-static conditions. The tip remains stationary at an appropriate position above the sample surface and no time needs to be spent on making the tip oscillate. Simulating 101 × 101 images with 2000 time steps takes 56 h for the graphite sample and 2 h for the gold sample. For more complex sample structures and potentials, especially when electronic structure is involved, the simulation may take even longer. In the quasi-static simulations, the tip shape has a great influence on the results and is convolved into the energy maps. Different simulation conditions, such as the tip–sample height and the cut-off distance, also affect the final imaging results. We generally need to perform multiple simulations to determine the appropriate simulation conditions. However, the number of calculation points required to form a whole image is huge, so it is necessary to shorten the simulation time by reducing the number of sampling points.
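The time estimate for the AM mode image can be reproduced with a few lines of arithmetic. The sketch below assumes a constant cost of six minutes per pixel and neglects the reconstruction time; the reduced pixel counts are those used for the reconstructions of the 51 × 51 image later in this section. The names are illustrative.

```python
MINUTES_PER_PIXEL = 6.0   # "about six minutes" per pixel in AM mode
N_SIDE = 51

def simulation_hours(n_pixels, minutes_per_pixel=MINUTES_PER_PIXEL):
    """Total simulation time in hours for a given number of pixels."""
    return n_pixels * minutes_per_pixel / 60.0

full_pixels = N_SIDE * N_SIDE
full_hours = simulation_hours(full_pixels)
print(f"full image: {full_pixels} pixels, {full_hours:.1f} h "
      f"({full_hours / 24.0:.1f} days)")

reduced = {
    "BCS, undersampling rate 0.5": 1300,
    "SRCNN, 2x (25 x 25)": 25 * 25,
    "SRCNN, 3x (17 x 17)": 17 * 17,
}
for label, n_pixels in reduced.items():
    hours = simulation_hours(n_pixels)
    print(f"{label}: {n_pixels} pixels, {hours:.1f} h "
          f"({n_pixels / full_pixels:.2f} of the full image)")
```

With these numbers the full image needs about 260 h, which is consistent with the stated estimate of roughly ten days.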

![[2190-4286-12-61-5]](/bjnano/content/figures/2190-4286-12-61-5.jpg?scale=2.0&max-width=1024&background=FFFFFF)

Figure 5: The interaction energy maps of graphite and gold samples obtained by molecular dynamics simulation. The size of the first image is 51 × 51 and the other images are 101 × 101. (a) The conical tip interacts with the graphite sample in AM mode. (b–f) The single Si atom tip interacts with the graphite sample at 7 Å. In (d) the cut-off distance of the tip–sample increases by 2 Å. In (e) the tip atom drops by 2 Å. (g) The hemispherical tip interacts with the gold sample. (h–i) The single Si atom tip interacts with the gold sample. In the simulations on the far-right column (c,f,i) we removed one atom from the sample surface.


Image reconstruction

In this part, we compare the performance of the BCS and SRCNN algorithms on the simulated AFM images. Figure 5a, with a size of 51 × 51, is used to test the impact of the number of sampling points on the reconstructed results, as shown in Figure 6. Generally, an undersampling rate of 0.5 ensures a good reconstruction for BCS [47]. This image contains 2601 pixels, and undersampling rates of 0.11 (298 pixels), 0.30 (784 pixels), and 0.50 (1300 pixels) are chosen to reconstruct the complete image. For SRCNN, we set the scale factors to 2× (25 × 25) and 3× (17 × 17) to construct super-resolution images. The peak signal-to-noise ratio (PSNR, expressed on the logarithmic decibel scale) and the structural similarity (SSIM, with values in the range [0,1]) are used to evaluate the quality of the reconstructed images. The PSNR is commonly used to quantify the loss of image quality after image compression, denoising, and reconstruction. The SSIM is a measure of the similarity between two images. Figure 6 shows that the reconstruction quality gradually improves as the undersampling rate increases. The reconstruction quality of the BCS method is mainly related to the undersampling rate. When the undersampling rate is higher than the sparsity of the image, the reconstruction quality hardly changes [47]. An undersampling rate of 0.5 guarantees a good reconstruction quality, which means that only half of the points need to be calculated to reconstruct a complete simulated image with the BCS method. For the SRCNN method, both the 2× and 3× scale factors reconstruct the image well, and the reconstruction quality is better than that of BCS. These results indicate that SRCNN can reduce the simulation time to 1/4 and 1/9 of the original time, respectively. Overall, for simulated images with few details, both algorithms show good reconstruction performance. However, BCS requires more sampling points to ensure the reconstruction quality. In addition, the time spent on the reconstruction algorithm is negligible relative to that of the molecular dynamics simulation.
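For reference, the PSNR mentioned above can be computed as in the following minimal sketch. Taking the peak value as the dynamic range of the reference image is an assumption made here because energy maps are not 8-bit images; the text does not state which peak value was used. SSIM is more involved and is not sketched.

```python
import math

def psnr(reference, reconstructed, peak=None):
    """Peak signal-to-noise ratio in dB between two equally sized 2D lists."""
    ref = [value for row in reference for value in row]
    rec = [value for row in reconstructed for value in row]
    if peak is None:
        peak = max(ref) - min(ref)   # assumption: dynamic range of the reference
    mse = sum((a - b) ** 2 for a, b in zip(ref, rec)) / len(ref)
    if mse == 0.0:
        return math.inf              # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

original = [[0.0, 1.0], [1.0, 0.0]]
approximation = [[0.0, 1.0], [1.0, 0.5]]
print(f"PSNR = {psnr(original, approximation):.2f} dB")
```

A higher PSNR corresponds to a smaller mean squared error between the reconstructed and the fully simulated image.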

![[2190-4286-12-61-6]](/bjnano/content/figures/2190-4286-12-61-6.jpg?scale=2.0&max-width=1024&background=FFFFFF)

Figure 6: The reconstructed results of Figure 5a with a size of 51 × 51. The first column is the original image, the second column is the undersampled image (or low-resolution image), and the last column is the reconstructed image. PSNR and SSIM are indicated on the right side of each image. The first three rows are the BCS results with undersampling rates of 0.11, 0.3, and 0.5, respectively. The last two rows are the SRCNN results with scale factors of 2× and 3×, respectively.


The other images are reconstructed to compare the two reconstruction algorithms further. Figure 7 presents the reconstructed results of Figure 5h, which has more details and a larger size of 101 × 101. The undersampling rate of BCS is 0.5 and the scale factors of SRCNN are 2× and 3×. The final reconstructed images show that both algorithms can complete the reconstruction of the image with more details. However, the quality of the reconstructed image is lower than for the previous image. The PSNR and SSIM values of the first two rows in Figure 7 differ little, but the detailed images on the right show that the reconstruction quality of BCS is better than that of SRCNN and that the details of the image are well reconstructed.

![[2190-4286-12-61-7]](/bjnano/content/figures/2190-4286-12-61-7.jpg?scale=2.0&max-width=1024&background=FFFFFF)

Figure 7: The reconstructed results of Figure 5h with a size of 101 × 101. The first row is the result of the BCS algorithm with an undersampling rate of 0.5. The last two rows are the results of the SRCNN algorithm with scale factors of 2× and 3×, respectively. The small images on the right show the details of the reconstructed image in the red box.


Other results are shown in Figure 8. Overall, both presented algorithms can complete the reconstruction. For images with fewer details, both algorithms can recover the simulated image from undersampled data with high quality. The SRCNN method needs fewer simulated points and can reduce the simulation time to 1/9. However, for images with more details, SRCNN reconstructs the details poorly. The BCS method can reconstruct the details with an undersampling rate of 0.5, which means that it can save half of the simulation time. Moreover, more sampling points ensure a better reconstruction quality.

![[2190-4286-12-61-8]](/bjnano/content/figures/2190-4286-12-61-8.jpg?scale=2.0&max-width=1024&background=FFFFFF)

Figure 8: Reconstructed results of the other seven images. The upper image of each group shows the result of the BCS reconstruction with an undersampling rate of 0.5, and the lower image represents the SRCNN reconstruction with a scale factor of 2×. PSNR and SSIM are indicated below each image.


Conclusion

In this paper, BCS and SRCNN methods are applied to reconstruct molecular simulation images in order to reduce the simulation time. Several energy maps of graphite and gold samples under different conditions are simulated for image reconstruction. Both presented algorithms can reduce the time of molecular dynamics simulation and complete the reconstruction with good quality. Through undersampling and low-resolution mapping, the number of calculated points can be reduced to 1/2 and 1/9, respectively, which means that the BCS method can reduce the simulation time by one half while the SRCNN method can reduce the simulation time to 1/9. Comparing the reconstruction results for images with different levels of detail shows that SRCNN has the better reconstruction quality when there are fewer details, whereas BCS is better at reconstructing details. For a preliminary simulation, the SRCNN method can be chosen to achieve a faster simulation and a preliminary judgment of the final simulated results. If quality is the priority, the BCS method can be used to achieve a balance between time and quality, and more sampling points ensure a better reconstruction quality. In addition, researchers typically use nearest-neighbor interpolation to upscale training images in order to achieve scale invariance in machine learning. Super-resolution methods could instead be applied to vary the simulation resolution, which is more accurate. In conclusion, these two methods can be used to speed up molecular simulation imaging and the generation of training data for AFM machine learning.

References

-

Binnig, G.; Quate, C. F.; Gerber, C. Phys. Rev. Lett. 1986, 56, 930–933. doi:10.1103/physrevlett.56.930

Return to citation in text: [1] -

Meyer, G.; Amer, N. M. Appl. Phys. Lett. 1990, 56, 2100–2101. doi:10.1063/1.102985

Return to citation in text: [1] -

Giessibl, F. J. Science 1995, 267, 68–71. doi:10.1126/science.267.5194.68

Return to citation in text: [1] -

Gross, L.; Mohn, F.; Moll, N.; Liljeroth, P.; Meyer, G. Science 2009, 325, 1110–1114. doi:10.1126/science.1176210

Return to citation in text: [1] -

Fatayer, S.; Albrecht, F.; Zhang, Y.; Urbonas, D.; Peña, D.; Moll, N.; Gross, L. Science 2019, 365, 142–145.

Return to citation in text: [1] -

Ellner, M.; Pou, P.; Pérez, R. ACS Nano 2019, 13, 786–795. doi:10.1021/acsnano.8b08209

Return to citation in text: [1] -

Gross, L.; Schuler, B.; Pavliček, N.; Fatayer, S.; Majzik, Z.; Moll, N.; Peña, D.; Meyer, G. Angew. Chem., Int. Ed. 2018, 57, 3888–3908. doi:10.1002/anie.201703509

Return to citation in text: [1] -

Sweetman, A. M.; Jarvis, S. P.; Sang, H.; Lekkas, I.; Rahe, P.; Wang, Y.; Wang, J.; Champness, N. R.; Kantorovich, L.; Moriarty, P. Nat. Commun. 2014, 5, 3931. doi:10.1038/ncomms4931

Return to citation in text: [1] -

Zhang, J.; Chen, P.; Yuan, B.; Ji, W.; Cheng, Z.; Qiu, X. Science 2013, 342, 611–614. doi:10.1126/science.1242603

Return to citation in text: [1] -

Sweetman, A.; Rahe, P.; Moriarty, P. Nano Lett. 2014, 14, 2265–2270. doi:10.1021/nl4041803

Return to citation in text: [1] -

Emmrich, M.; Huber, F.; Pielmeier, F.; Welker, J.; Hofmann, T.; Schneiderbauer, M.; Meuer, D.; Polesya, S.; Mankovsky, S.; Kodderitzsch, D.; Ebert, H.; Giessibl, F. J. Science 2015, 348, 308–311. doi:10.1126/science.aaa5329

Return to citation in text: [1] -

Boneschanscher, M. P.; Hämäläinen, S. K.; Liljeroth, P.; Swart, I. ACS Nano 2014, 8, 3006–3014. doi:10.1021/nn500317r

Return to citation in text: [1] -

Gross, L.; Mohn, F.; Moll, N.; Schuler, B.; Criado, A.; Guitian, E.; Pena, D.; Gourdon, A.; Meyer, G. Science 2012, 337, 1326–1329. doi:10.1126/science.1225621

Return to citation in text: [1] -

Fan, D.; Sakai, Y.; Chelikowsky, J. R. Nano Lett. 2019, 19, 5562–5567. doi:10.1021/acs.nanolett.9b02097

Return to citation in text: [1] -

Pavliček, N.; Schuler, B.; Collazos, S.; Moll, N.; Pérez, D.; Guitián, E.; Meyer, G.; Peña, D.; Gross, L. Nat. Chem. 2015, 7, 623–628. doi:10.1038/nchem.2300

Return to citation in text: [1] -

Komiyama, M.; Tsujimichi, K.; Tazawa, K.; Hirotani, A.; Yamano, H.; Kubo, M.; Broclawik, E.; Miyamoto, A. Surf. Sci. 1996, 357–358, 222–227. doi:10.1016/0039-6028(96)00097-0

Return to citation in text: [1] -

Liang, Y.; Dou, J.; Bai, Q.; Wang, S.; Chen, M.; Zhao, Y.; Dong, S. Subatomic imaging of Si (001) surface by molecular dynamic simulation. In 2006 1st IEEE International Conference on Nano/Micro Engineered and Molecular Systems, Vols 1-3, International Conference on Nano-Micro Engineered and Molecular Systems NEMS; 2006; pp 1156–1159. doi:10.1109/nems.2006.334670

Return to citation in text: [1] -

Belikov, S.; Magonov, S. Jpn. J. Appl. Phys., Part 1 2006, 45, 2158–2165. doi:10.1143/jjap.45.2158

Return to citation in text: [1] -

Loske, F.; Rahe, P.; Kühnle, A. Nanotechnology 2009, 20, 264010. doi:10.1088/0957-4484/20/26/264010

Return to citation in text: [1] -

Pishkenari, H. N.; Meghdari, A. Curr. Appl. Phys. 2010, 10, 583–591. doi:10.1016/j.cap.2009.08.002

Return to citation in text: [1] -

Kheirodin, M.; Pishkenari, H. N.; Moosavi, A.; Meghdari, A. Nano 2015, 10, 1550096. doi:10.1142/s1793292015500964

Return to citation in text: [1] -

van der Lit, J.; Di Cicco, F.; Hapala, P.; Jelinek, P.; Swart, I. Phys. Rev. Lett. 2016, 116, 096102. doi:10.1103/physrevlett.116.096102

Return to citation in text: [1] -

Reischl, B.; Watkins, M.; Foster, A. S. J. Chem. Theory Comput. 2013, 9, 600–608. doi:10.1021/ct3008342

Return to citation in text: [1] -

Fukuma, T.; Reischl, B.; Kobayashi, N.; Spijker, P.; Canova, F. F.; Miyazawa, K.; Foster, A. S. Phys. Rev. B 2015, 92, 155412. doi:10.1103/physrevb.92.155412

Return to citation in text: [1] -

Tracey, J.; Miyazawa, K.; Spijker, P.; Miyata, K.; Reischl, B.; Canova, F. F.; Rohl, A. L.; Fukuma, T.; Foster, A. S. Nanotechnology 2016, 27, 415709. doi:10.1088/0957-4484/27/41/415709

Return to citation in text: [1] -

Alldritt, B.; Hapala, P.; Oinonen, N.; Urtev, F.; Krejci, O.; Federici Canova, F.; Kannala, J.; Schulz, F.; Liljeroth, P.; Foster, A. S. Sci. Adv. 2020, 6, eaay6913. doi:10.1126/sciadv.aay6913

Return to citation in text: [1] [2] -

Cellini, F.; Lavini, F.; Berger, C.; de Heer, W.; Riedo, E. 2D Mater. 2019, 6, 035043. doi:10.1088/2053-1583/ab1b9f

Borodinov, N.; Tsai, W.-Y.; Korolkov, V. V.; Balke, N.; Kalinin, S. V.; Ovchinnikova, O. S. Appl. Phys. Lett. 2020, 116, 044103. doi:10.1063/1.5135328

Huang, B.; Li, Z.; Li, J. Nanoscale 2018, 10, 21320–21326. doi:10.1039/c8nr06734a

Kelley, K. P.; Ziatdinov, M.; Collins, L.; Susner, M. A.; Vasudevan, R. K.; Balke, N.; Kalinin, S. V.; Jesse, S. Small 2020, 16, 2002878. doi:10.1002/smll.202002878

Wu, Y.; Fang, Y.; Wang, C.; Liu, C.; Fan, Z. Ultramicroscopy 2020, 213, 112991. doi:10.1016/j.ultramic.2020.112991

Gordon, O. M.; Hodgkinson, J. E. A.; Farley, S. M.; Hunsicker, E. L.; Moriarty, P. J. Nano Lett. 2020, 20, 7688–7693. doi:10.1021/acs.nanolett.0c03213

Oxley, M. P.; Yin, J.; Borodinov, N.; Somnath, S.; Ziatdinov, M.; Lupini, A. R.; Jesse, S.; Vasudevan, R. K.; Kalinin, S. V. Mach. Learn.: Sci. Technol. 2020, 1, 04LT01. doi:10.1088/2632-2153/aba32d

Yang, J.; Wright, J.; Huang, T. S.; Ma, Y. IEEE Trans. Image Process. 2010, 19, 2861–2873. doi:10.1109/tip.2010.2050625

Dong, C.; Loy, C. C.; He, K.; Tang, X. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307. doi:10.1109/tpami.2015.2439281

Timofte, R., Ed. 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2017. doi:10.1109/cvprw.2017.150

Candes, E. J.; Tao, T. IEEE Trans. Inf. Theory 2006, 52, 5406–5425. doi:10.1109/tit.2006.885507

Song, B.; Xi, N.; Yang, R.; Lai, K. W. C.; Qu, C. In 2011 11th IEEE International Conference on Nanotechnology, Portland, OR, USA, Aug 15–18, 2011; IEEE, 2011; pp 1056–1059. doi:10.1109/nano.2011.6144587

Maxwell, B. D.; Andersson, S. B. In 2014 American Control Conference, Portland, OR, USA, June 4–6, 2014; IEEE, 2014; pp 1631–1636. doi:10.1109/acc.2014.6858710

Andersson, S. B.; Pao, L. Y. In 2012 American Control Conference (ACC), Montreal, QC, Canada, June 27–29, 2012; IEEE, 2012; pp 2485–2490. doi:10.1109/acc.2012.6315406

Arildsen, T.; Oxvig, C. S.; Pedersen, P. S.; Ostergaard, J.; Larsen, T. IEEE J. Sel. Top. Signal Process. 2016, 10, 31–46. doi:10.1109/jstsp.2015.2500363

Han, G.; Lin, B. Ultramicroscopy 2018, 189, 85–94. doi:10.1016/j.ultramic.2018.03.019

Luo, Y.; Andersson, S. B. Nanotechnology 2015, 26, 505703. doi:10.1088/0957-4484/26/50/505703

Candès, E.; Romberg, J. Inverse Probl. 2007, 23, 969–985. doi:10.1088/0266-5611/23/3/008

Ji, S.; Xue, Y.; Carin, L. IEEE Trans. Signal Process. 2008, 56, 2346–2356. doi:10.1109/tsp.2007.914345

Babacan, S. D.; Molina, R.; Katsaggelos, A. K. IEEE Trans. Image Process. 2010, 19, 53–63. doi:10.1109/tip.2009.2032894

Zhang, Y.; Li, Y.; Wang, Z.; Song, Z.; Lin, R.; Qian, J.; Yao, J. Meas. Sci. Technol. 2019, 30, 025402. doi:10.1088/1361-6501/aaf4e7

Zhang, Y.; Li, Y.; Song, Z.; Wang, Z.; Qian, J.; Yao, J. Beilstein J. Nanotechnol. 2019, 10, 2346–2356. doi:10.3762/bjnano.10.225

Simonyan, K.; Zisserman, A. arXiv 2014, No. 1409.1556.

He, K.; Zhang, X.; Ren, S.; Sun, J. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 27–30, 2016; IEEE: Las Vegas, NV, USA, 2016; pp 770–778. doi:10.1109/cvpr.2016.90

Goodfellow, I. J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. In Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2, Montreal, Canada, Dec 8–13, 2014; MIT Press, 2014; pp 2672–2680. doi:10.1109/vlsid.2014.109

Kim, J.; Lee, J. K.; Lee, K. M. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 27–30, 2016; IEEE: Las Vegas, NV, USA, 2016; pp 1646–1654. doi:10.1109/cvpr.2016.181

Luo, Y.; Andersson, S. B. Micron 2020, 130, 102814. doi:10.1016/j.micron.2019.102814

Dou, Z.; Qian, J.; Li, Y.; Wang, Z.; Zhang, Y.; Lin, R.; Wang, T. Ultramicroscopy 2020, 212, 112971. doi:10.1016/j.ultramic.2020.112971

Stuart, S. J.; Tutein, A. B.; Harrison, J. A. J. Chem. Phys. 2000, 112, 6472–6486. doi:10.1063/1.481208

Foiles, S. M.; Baskes, M. I.; Daw, M. S. Phys. Rev. B 1986, 33, 7983–7991. doi:10.1103/physrevb.33.7983

© 2021 Dou et al.; licensee Beilstein-Institut.

This is an Open Access article under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0). Please note that the reuse, redistribution and reproduction in particular requires that the author(s) and source are credited and that individual graphics may be subject to special legal provisions.

The license is subject to the Beilstein Journal of Nanotechnology terms and conditions: (https://www.beilstein-journals.org/bjnano/terms)